The Cloud Will Break. Will Your Systems?

Cloud outages are part of the operating environment now. That’s not news.

On November 18, 2025, a Cloudflare outage disrupted access to major platforms, including X and ChatGPT. In October 2025, AWS and Azure both experienced incidents that rippled into thousands of downstream services.

When these platforms go down, your options are limited. You wait. You communicate. You recover when the provider recovers.

That part is unavoidable.

The real risk usually isn’t upstream. It’s internal. And it’s almost always self-inflicted.

Most teams spend their energy worrying about outages they can’t control while ignoring the failure paths they own. External outages expose systems. Internal weaknesses take them down.

Where Teams Actually Get Hurt

Most serious incidents don’t start with a cloud provider. They start with unverified assumptions.

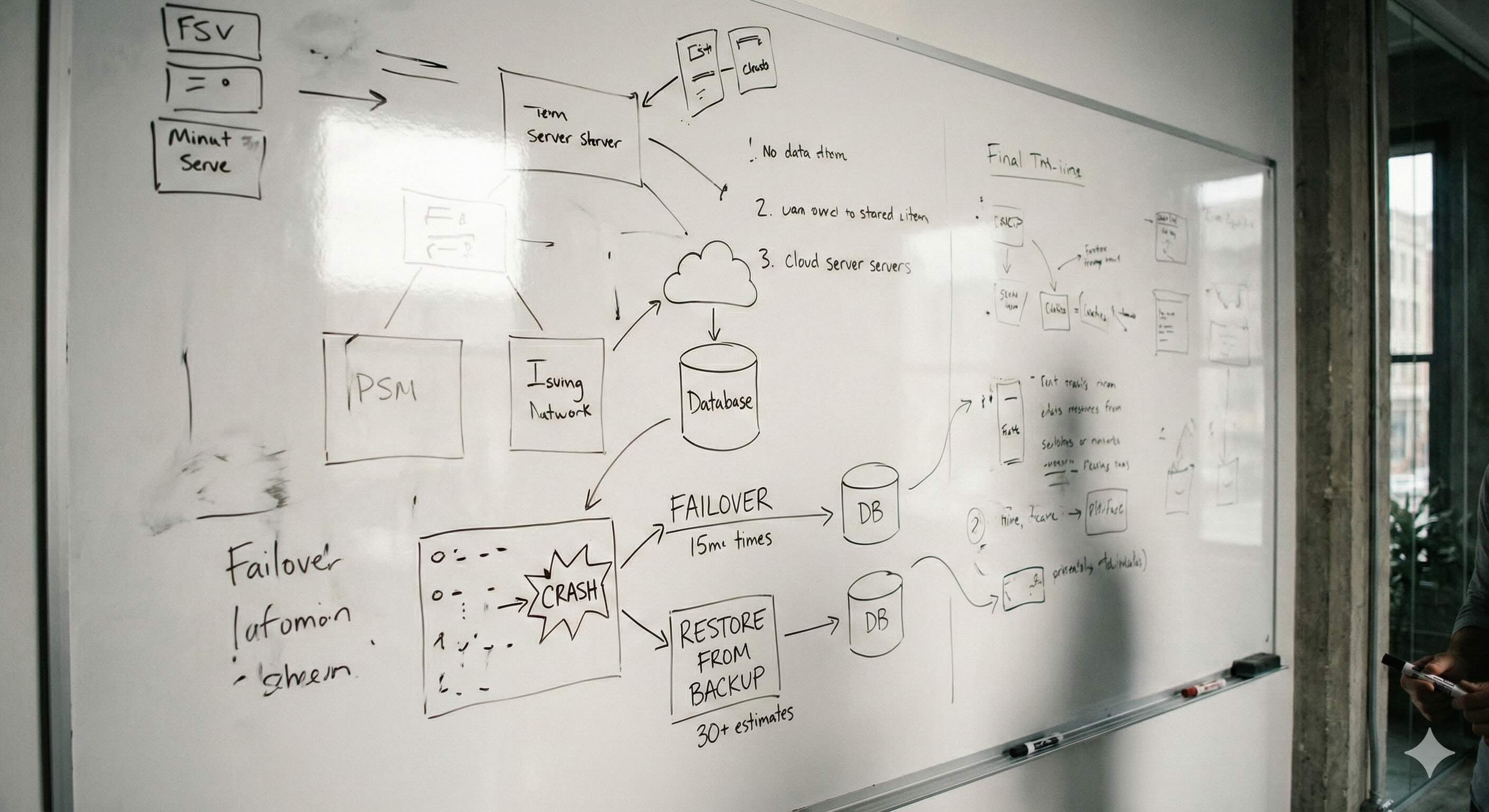

- A backup exists, but no one has restored it under real conditions.

- A recovery path works in a test environment but collapses at production scale.

- Credentials haven’t been rotated because nothing bad has happened yet.

- Monitoring fires alerts but doesn’t tell anyone what actually broke—or who owns fixing it.

None of this feels urgent in isolation, but together, it turns a routine upstream outage into a prolonged business incident.

We’ve seen this pattern repeatedly when initially engaging with enterprise clients.

A typical case: the primary database restore has never been tested at production scale. The backup exists, but the restore path fails. What should be a short disruption stretches into hours or days of downtime while teams scramble to debug something they assumed worked.

By the time the cloud provider has fully recovered, the internal outage is just getting started.
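A restore drill does not have to be elaborate to be useful. The sketch below is a minimal illustration using SQLite from the Python standard library as a stand-in for a real production database. The function names `backup_database` and `restore_drill`, and the row-count check, are assumptions made for this example rather than a prescribed method; a real drill would also exercise production-sized data and timing.

```python
import sqlite3


def backup_database(source: sqlite3.Connection, backup_path: str) -> None:
    """Write a full copy of the live database to backup_path."""
    target = sqlite3.connect(backup_path)
    try:
        source.backup(target)  # sqlite3's built-in online backup
    finally:
        target.close()


def restore_drill(backup_path: str, expected_rows: dict[str, int]) -> list[str]:
    """Open the backup as the restored database and return any problems found.

    expected_rows maps a table name to the minimum row count we expect
    (a hypothetical sanity check; choose checks that fit your data).
    """
    problems: list[str] = []
    restored = sqlite3.connect(backup_path)
    try:
        status = restored.execute("PRAGMA integrity_check").fetchone()[0]
        if status != "ok":
            problems.append(f"integrity check failed: {status}")
        for table, minimum in expected_rows.items():
            try:
                count = restored.execute(
                    f'SELECT COUNT(*) FROM "{table}"'
                ).fetchone()[0]
            except sqlite3.Error as exc:
                problems.append(f"{table}: could not be read ({exc})")
                continue
            if count < minimum:
                problems.append(f"{table}: {count} rows, expected at least {minimum}")
    finally:
        restored.close()
    return problems
```

An empty list means the backup opened, passed its integrity check, and contained the data you expected. Anything else is a finding you would rather have on a quiet afternoon than during an incident.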

A Quick Reality Check for Leaders

If any of the questions below make you uncomfortable, you're not alone, but you are at risk.

- When was the last time your team restored a full production backup end to end?

- Have you ever watched your system degrade under partial failure, or only seen it fully up or fully down?

- Do database writes consistently succeed or fail as a unit, or can partial state leak through?

- During an incident, is it obvious who is in charge, what decisions they can make, and what success looks like?

- If a major dependency failed tonight, would your system pause cleanly, or keep half-working and corrupting itself?

Most teams can’t confidently answer these. Not because they’re careless—but because no one forced the system to prove it.
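The question about writes succeeding or failing as a unit is one a team can force the system to answer. As a minimal sketch, assuming a hypothetical `accounts` table and using SQLite from the Python standard library, the example below wraps two related updates in one transaction so that a failure partway through leaves no partial state behind. The `transfer` function and the schema are illustrative assumptions, not a description of any particular system.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, from_id: int, to_id: int, amount: int) -> None:
    """Move amount between two accounts; both updates apply or neither does."""
    # Used as a context manager, the connection commits if the block
    # finishes and rolls back if it raises.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?",
            (amount, from_id),
        )
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?",
            (amount, to_id),
        )
        row = conn.execute(
            "SELECT balance FROM accounts WHERE id = ?", (from_id,)
        ).fetchone()
        if row is None or row[0] < 0:
            # Raising here undoes both updates above.
            raise ValueError("insufficient funds or unknown account")
```

The useful test is the failing one: attempt a transfer that should be rejected, then confirm both balances are exactly what they were before.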

Hardening What You Actually Control

Reliable systems rehearse failure.

Teams that recover quickly do the unglamorous work early:

- Restore backups instead of trusting that they exist

- Validate recovery paths under real load

- Rotate credentials on schedule, not after a scare

- Reduce blast radius instead of assuming perfect behavior

- Establish ownership before something breaks

- Practice incident response while the stakes are low

None of this prevents external outages. That isn’t the goal.

The goal is simple: When something outside your control fails, your system doesn’t make it worse.
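One common way to keep a failing dependency from making things worse is a circuit breaker: after repeated failures, stop calling the dependency for a while and fail fast instead. The sketch below is a simplified illustration of that pattern, not a production implementation. The class name, the `DependencyUnavailable` exception, and the default thresholds are all assumptions chosen for the example.

```python
import time


class DependencyUnavailable(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so tests can control time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast without touching the struggling dependency.
                raise DependencyUnavailable("circuit open; call skipped")
            # Cooldown has elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Callers that catch `DependencyUnavailable` can pause, queue, or degrade deliberately, which is the difference between a system that stops cleanly and one that keeps half-working.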

The Leadership Difference

Outages create uncertainty. What happens next depends on leadership.

Strong leaders assume outages will happen. They’re explicit about which risks matter, which don’t, and how the organization responds when something breaks upstream.

They communicate early and clearly.

What’s known. What isn’t. What happens next.

That clarity keeps teams focused and prevents panic-driven decisions that cause more damage than the outage itself.

The teams that hold up under pressure aren’t the ones who tried to design failure out of the system.

They’re the ones who made sure failure **stopped where it started**.

What to Do If This Hit Close to Home

If this article made you uneasy, that’s not a bad thing. It means you’re asking the right question:

“Would our system fail cleanly—or cascade?”

The fastest way to answer that isn’t another document or audit.

It’s a focused, senior-level review of your real failure paths:

- What breaks first

- What breaks next

- What should stop but doesn’t

- And where assumptions are quietly doing the most damage

That’s the work we do with companies like yours when the cost of being wrong is high.
